RoboHop: Segment-based Topological Map Representation for Open-World Visual Navigation

Perturbed Initializations

RoboHop's underlying object-level reasoning makes it robust to perturbations in its initialization. The robot is able to reach its destination ("ladder") despite variations in the viewpoint of its source node ("white triangular machine"). Please refer to the explainer video above for more details.

Abstract

Mapping is crucial for spatial reasoning, planning and robot navigation. Existing approaches range from metric, which require precise geometry-based optimization, to purely topological, where image-as-node based graphs lack explicit object-level reasoning and interconnectivity. In this paper, we propose a novel topological representation of an environment based on image segments, which are semantically meaningful and open-vocabulary queryable, conferring several advantages over previous works based on pixel-level features. Unlike 3D scene graphs, we create a purely topological graph but with segments as nodes, where edges are formed by a) associating segment-level descriptors between pairs of consecutive images and b) connecting neighboring segments within an image using their pixel centroids. This unveils a continuous sense of a 'place', defined by inter-image persistence of segments along with their intra-image neighbors. It further enables us to represent and update segment-level descriptors through neighborhood aggregation using graph convolution layers, which improves robot localization based on segment-level retrieval. Using real-world data, we show how our proposed mapping can be used to generate navigation plans and actions in the form of hops over segment tracks, directly from natural language queries. Furthermore, we quantitatively analyze data association at the segment level, which underpins inter-image connectivity during mapping and segment-level localization when revisiting the same place. Finally, we show preliminary trials on segment-level 'hopping' based zero-shot real-world navigation.
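As a rough illustration of the graph construction described above, the following Python sketch builds the two edge types over segment nodes. It is not the authors' implementation: the function names, the cosine-similarity threshold `min_sim`, and the k-nearest-centroid rule for intra-image neighbors are all assumptions made for this example, and each segment is assumed to arrive as a `(descriptor, centroid)` pair from some upstream segmentation and feature-extraction step.

```python
import math


def cosine(a, b):
    """Cosine similarity between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def build_segment_graph(images, k=2, min_sim=0.8):
    """Build a segment-level topological graph (hypothetical helper).

    images: list of images, each a list of (descriptor, centroid) segments.
    Nodes are (image_index, segment_index) tuples.
    Returns (intra_edges, inter_edges) as sets of node pairs.
    """
    intra, inter = set(), set()
    for i, segs in enumerate(images):
        # (b) intra-image edges: link each segment to its k nearest
        # segments by pixel-centroid distance (an assumed neighbor rule).
        for s, (_, c) in enumerate(segs):
            others = sorted(
                (t for t in range(len(segs)) if t != s),
                key=lambda t: math.dist(c, segs[t][1]),
            )
            for t in others[:k]:
                intra.add(tuple(sorted([(i, s), (i, t)])))
        # (a) inter-image edges: associate each segment with its best
        # descriptor match in the next image, if it is similar enough.
        if i + 1 < len(images) and images[i + 1]:
            nxt = images[i + 1]
            for s, (d, _) in enumerate(segs):
                best = max(range(len(nxt)), key=lambda t: cosine(d, nxt[t][0]))
                if cosine(d, nxt[best][0]) >= min_sim:
                    inter.add(((i, s), (i + 1, best)))
    return intra, inter
```

Chains of inter-image edges then play the role of the segment tracks over which plans hop, while intra-image edges tie each track to its neighbors within a frame.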

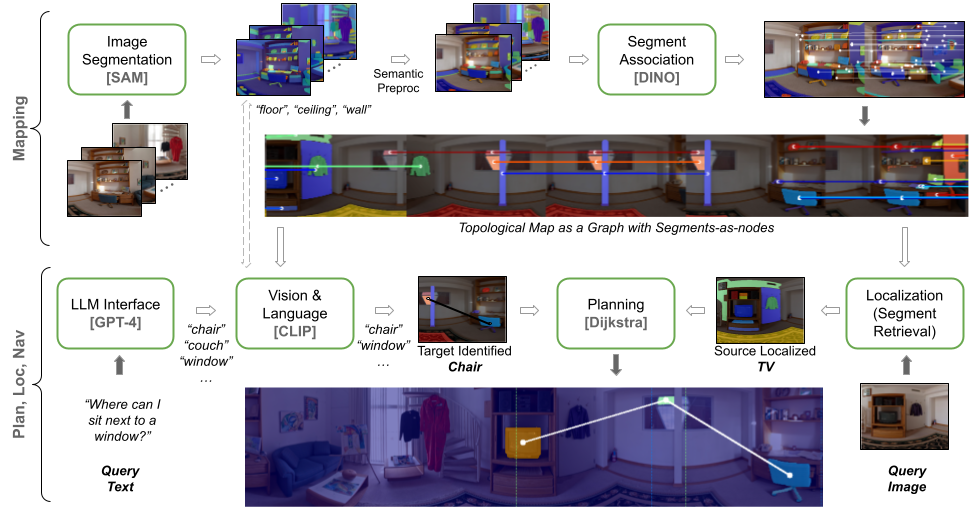

Method Pipeline

An illustration of our overall pipeline from image segments to mapping, open-vocabulary querying, and planning.

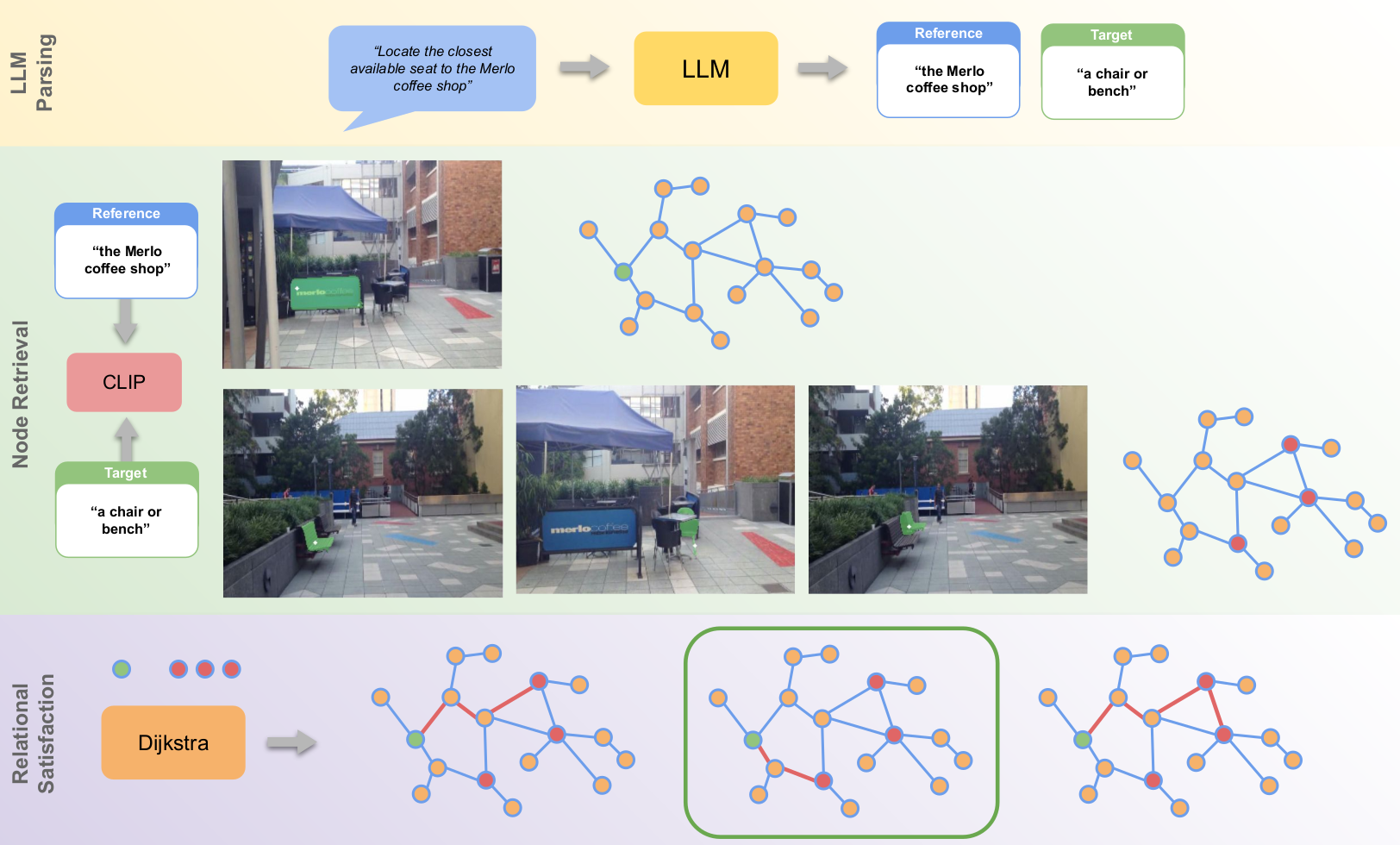

LLM-Based Relational Queries

Using our topological representation, plans can be generated from complex relational queries such as "locate the closest available seat to the Merlo's coffee shop". This exploits the map's ability to capture both intra- and inter-image spatial relationships, an ability not present in existing methods. The key is to identify, from the relational query, the target nodes ("chairs or benches") and the reference nodes to which the target is related ("the Merlo coffee shop") in the scene. We do this by prompting an LLM to parse the query and produce textual descriptions of these nodes of interest. The descriptions are then encoded into feature vectors by CLIP's text encoder, and these vectors are used to perform node retrieval across all nodes in the map. At this stage, multiple reference and target nodes may satisfy the textual descriptions. To satisfy the relational constraint and identify the appropriate target node, we compute the path length between every identified reference node and every identified target node using Dijkstra's algorithm. The target node connected to a reference node by the shortest path is then selected as the node that satisfies the proximity constraint.
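The final selection step can be sketched in a few lines of Python. This is a minimal sketch, not the authors' code: it assumes the LLM parsing and CLIP-based retrieval have already produced candidate `reference_nodes` and `target_nodes`, and that the map is available as a weighted adjacency dictionary `adj`. The names `dijkstra` and `closest_target`, the node labels, and the edge weights are hypothetical.

```python
import heapq


def dijkstra(adj, source):
    """Shortest path lengths from source to every reachable node.

    adj: dict mapping node -> list of (neighbor, non-negative weight).
    """
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry, a shorter path was already found
        for v, w in adj.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


def closest_target(adj, reference_nodes, target_nodes):
    """Return (path_length, reference, target) for the closest pair,
    or None if no target is reachable from any reference."""
    best = None
    for ref in reference_nodes:
        dist = dijkstra(adj, ref)
        for tgt in target_nodes:
            if tgt in dist and (best is None or dist[tgt] < best[0]):
                best = (dist[tgt], ref, tgt)
    return best


# Toy map: node names and weights are made up for illustration.
adj = {
    "cafe": [("door", 1.0)],
    "door": [("cafe", 1.0), ("bench", 1.0), ("hall", 2.0)],
    "bench": [("door", 1.0)],
    "hall": [("door", 2.0), ("chair", 1.0)],
    "chair": [("hall", 1.0)],
}
print(closest_target(adj, ["cafe"], ["chair", "bench"]))
# → (2.0, 'cafe', 'bench')
```

Running one search per reference node keeps the sketch close to the description above; with many reference nodes, a single multi-source search would give the same answer with less work.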

BibTeX

@article{RoboHop,

author = {Sourav Garg and Krishan Rana and Mehdi Hosseinzadeh and Lachlan Mares and Niko Suenderhauf and Feras Dayoub and Ian Reid},

title = {RoboHop: Segment-based Topological Map Representation for Open-World Visual Navigation},

journal = {arXiv},

year = {2023},

}